These days it is very important for a site to perform decently, for visitors as well as to reduce server costs for Google 😉. Improving performance becomes a hard task when a website has a lot of machinery and complex parts from the past. In this article I will explain the low-hanging fruit we could harvest. We also moved to Akamai to make use of a CDN, since we serve the larger part of Europe.

Keep in mind that this process is based on our main site but it could be useful for other sites as well.

JS, try to decrease the JavaScript loaded

The main issue with lots of JavaScript is that it needs to be parsed, which is a problem for mid-range and lower-end smartphones.

We took the following steps:

- Gaining insight

- Removing unneeded javascript

- Having a discussion with marketing about the marketing scripts loaded by Google Tag Manager

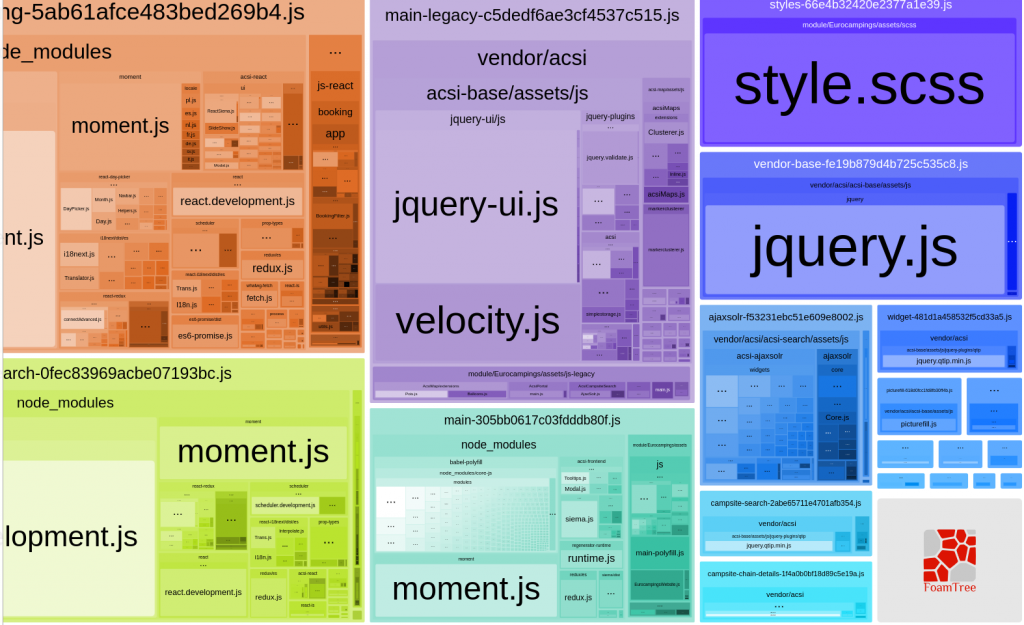

- Creating a division in transpiled bundles, one for legacy browsers and one for modern browsers

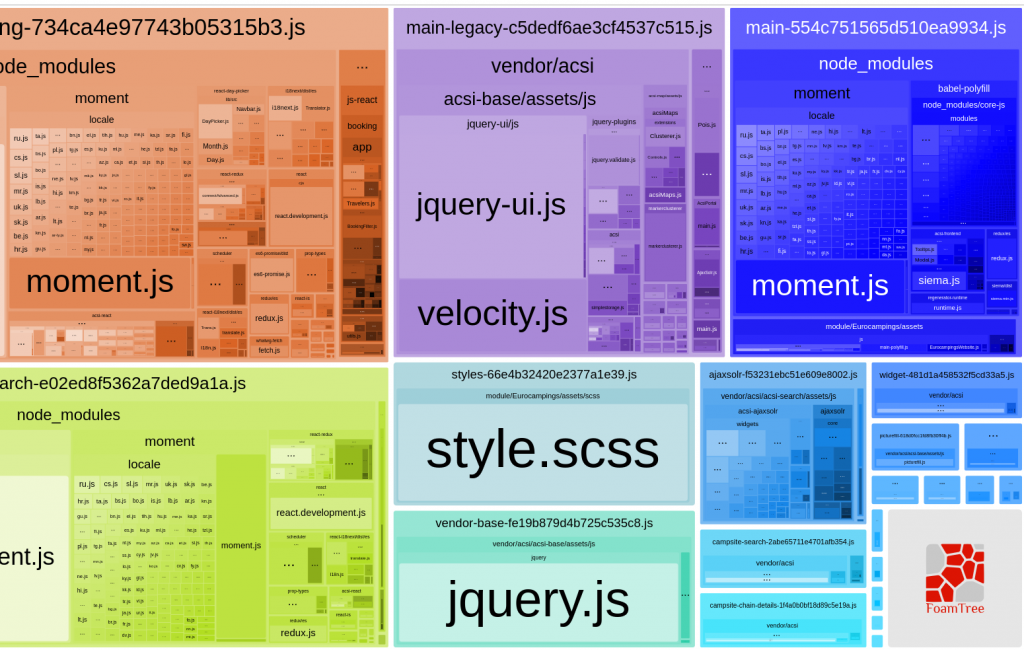

First we needed some insights. Luckily there is a convenient package for that: `webpack-bundle-analyzer`. Once it was installed, I created an npm task to run webpack and analyze the results right away.

```json
"webpack-analyze": "webpack --env.NODE_ENV=develop --profile --json > module/Eurocampings/analysis/stats.json && webpack-bundle-analyzer module/Eurocampings/analysis/stats.json"
```

This task runs webpack with some statistics flags, which write the output as JSON. After that, webpack-bundle-analyzer does the crunching and presents us with a nice Mondrian-esque webpage like the image below.

The first obvious thing for us was to remove the Moment.js locales, which we did in webpack with the IgnorePlugin:

```javascript
const webpack = require('webpack');

// Keep the locale files that moment pulls in by default out of the bundle.
const ignorePlugin = new webpack.IgnorePlugin({
  resourceRegExp: /^\.\/locale$/,
  contextRegExp: /moment$/
});
```

This removed all the locales from the bundle. We still use several locales, which we import directly in the modules that need them. For us this already saved a few hundred kB.

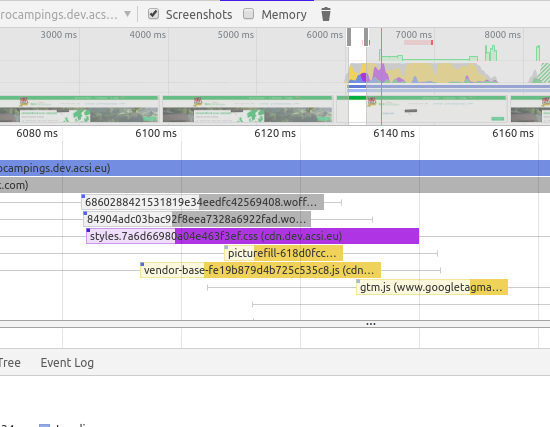

For Google Tag Manager (GTM) we first tested what the performance increase would be without it. It turned out that the increase was significant. Since we cannot market our site properly without GTM, we need to discuss with the business what can be changed in GTM to improve the performance of the site.

For the separate bundling of the JavaScript resources we need to decide which browser versions we still want to support. The gain of this process will be faster parsing in browsers that are already modern. We need to calculate the business value before we take this route.
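How the browser would choose between the two bundles is not something we have settled. One common approach is the `module`/`nomodule` pattern: modern browsers load the `type="module"` script and skip the `nomodule` one, while legacy browsers do the opposite. Below is a minimal sketch that assumes this pattern; the helper name and the bundle file names are hypothetical.

```javascript
// Hypothetical helper, not part of our codebase: builds the two script tags
// used by the module/nomodule pattern.
function differentialScriptTags(modernSrc, legacySrc) {
  return [
    // Browsers that support ES modules load this bundle...
    `<script type="module" src="${modernSrc}"></script>`,
    // ...and skip this one. Legacy browsers do not execute type="module"
    // scripts and ignore the nomodule attribute, so they load only this bundle.
    `<script nomodule defer src="${legacySrc}"></script>`,
  ].join('\n');
}

// The bundle paths are assumptions for illustration.
console.log(differentialScriptTags('/js/app.modern.js', '/js/app.legacy.js'));
```

The modern bundle can then be transpiled for a much narrower browser list, which is where the parsing gain would come from.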

CSS, remove unneeded things

- Removing unneeded CSS

- Check for mixins which generate lots of extra CSS

- Remove vendor prefixes and, if needed, use PostCSS and Autoprefixer

Critical rendering, inline CSS

The principle of inline CSS is actually simple: use an npm package that uses a headless browser to scan a local or live HTML page, restricted to a given width and height. The selectors (class names, for example) of the elements inside that box are used to extract the styles to be inlined in the page, which improves critical rendering.

For our situation, with many dynamic pages, we needed to find an automated solution. We also asked ourselves whether we should generate one generic inline CSS blob, or whether each page should have its own inline CSS section. The latter is more accurate but requires more processing time, and we would need to scan every possible page.

We went for the following solution:

- Scan the most important pages

- Combine the extracted CSS into one chunk of inline CSS

- Insert the inline CSS on all the pages

We use https://github.com/pocketjoso/penthouse. This gives us a more low-level critical CSS module, which helps us fetch the styles from several pages and combine them into one critical CSS file.
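The scan-and-combine steps could look roughly like the sketch below. It is an illustration and not our actual build script: the function names, the viewport size and the simple de-duplication are assumptions. Penthouse is passed in as a parameter so the sketch has no hard dependency; in a real script it would be `require('penthouse')`, which returns a promise of the critical CSS string for one page.

```javascript
// Merge the critical CSS of several pages into one blob, skipping empty
// results and pages whose critical CSS is identical to one already collected.
function combineCriticalCss(cssPerPage) {
  const seen = new Set();
  const chunks = [];
  for (const css of cssPerPage) {
    const trimmed = css.trim();
    if (trimmed !== '' && !seen.has(trimmed)) {
      seen.add(trimmed);
      chunks.push(trimmed);
    }
  }
  return chunks.join('\n');
}

// Hypothetical wrapper: runs penthouse once per page and combines the output.
// `urls` are the most important pages, `cssFile` is the path to the full
// stylesheet. Pages are scanned one by one to keep the headless browser load low.
async function generateCriticalCss(penthouse, urls, cssFile) {
  const cssPerPage = [];
  for (const url of urls) {
    cssPerPage.push(
      await penthouse({ url, css: cssFile, width: 1300, height: 900 })
    );
  }
  return combineCriticalCss(cssPerPage);
}
```

Rules shared between pages will still appear more than once in the combined output, so running the result through a CSS minifier afterwards is worth considering.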

Other assets

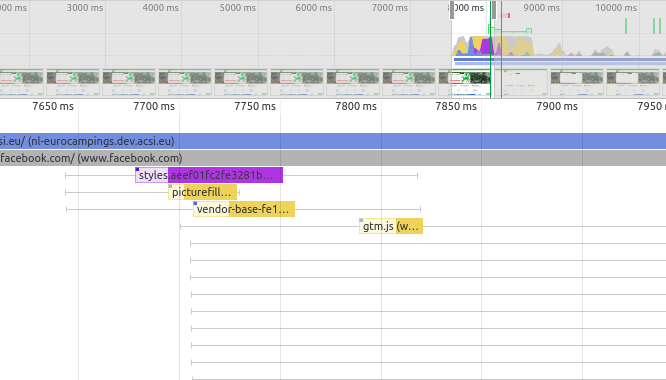

We sure had something to optimize in the other assets as well. Fonts were high on the list and easy to fix.

The font preloading was actually very simple. We decided to preload only the woff2 fonts and keep the woff format as a fallback for IE11, which we still support, although luckily its usage is slowly decreasing. We use an asset helper which reads a manifest.json generated by webpack. Since we do not require the fonts anywhere, we needed to bundle the font files explicitly, like this:

```javascript
const path = require('path');

const fontStagMedium = './module/Eurocampings/assets/fonts/stag-medium-webfont.woff2';
const fontStagLight = './module/Eurocampings/assets/fonts/stag-light-webfont.woff2';

// Fragment of the webpack config: each font gets its own entry so that it
// ends up in the generated manifest.
entry: {
  stagMedium: path.resolve(__dirname, fontStagMedium),
  stagLight: path.resolve(__dirname, fontStagLight),
},
```

Now we could add the fonts to the head section to preload them:

```html
<link rel="preload" href="<?php echo $this->assetPacker('stag-medium-webfont.woff2'); ?>" as="font" type="font/woff2" crossorigin>
<link rel="preload" href="<?php echo $this->assetPacker('stag-light-webfont.woff2'); ?>" as="font" type="font/woff2" crossorigin>
```
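To illustrate what a manifest-based asset helper does, here is a small JavaScript sketch. Our real helper is the PHP `assetPacker` call shown above; the function name, the manifest shape (logical file name mapped to built URL) and the hashed path below are assumptions for illustration. Note that the `crossorigin` attribute is needed even for same-origin fonts, because browsers fetch fonts in anonymous CORS mode and would otherwise not reuse the preloaded file.

```javascript
// Hypothetical JavaScript counterpart of a manifest-based asset helper.
// Assumes manifest.json maps a logical file name to its built URL.
function fontPreloadTags(manifest, fontNames) {
  return fontNames
    // Skip fonts that are not in the manifest instead of emitting a broken link.
    .filter((name) => Object.prototype.hasOwnProperty.call(manifest, name))
    .map((name) =>
      `<link rel="preload" href="${manifest[name]}" as="font" type="font/woff2" crossorigin>`)
    .join('\n');
}

// Example manifest; the hash in the path is made up.
const exampleManifest = {
  'stag-medium-webfont.woff2': '/dist/stag-medium-webfont.3f9a1c.woff2',
};
console.log(fontPreloadTags(exampleManifest, ['stag-medium-webfont.woff2', 'missing.woff2']));
```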

Wrap up

The business

We learned that it is necessary to tell the business that, with a large and complicated site, there are no silver bullets. You have to improve the site (and its Lighthouse score) point by point. Be transparent about the technical cost of each step. Sometimes you can combine the performance work with some refactoring, which helps to sell the step.

Things left to improve

One of the hardest parts we currently deal with is that our site has been around for many years and has a lot of stakeholders. The result is that we have a lot... a lot of HTML, JS and CSS. We have pointed this out to the business many times: the site needs focus, and afterwards we can strip things out. It turns out that this is not easy. We decided to improve our user measurements and notify the business of parts that are never used and need to be stripped out. And of course we need to implement some service worker functionality to help with client-side caching, especially for mobile users.

Current improvements

We still have work to do to improve the scores even further, but our current measurements with Lighthouse and PageSpeed already show an improvement of at least 200%. We are still performing mediocrely on mobile. Luckily we know which steps we need to take.